Tools for the 21st century musician

Soledad Penadés

@supersole

Let’s start with a supposition:

You all are musicians or artists

and very edgy!

So you want to break fronteers;

go where no one has been before:

bring your art to the web!

Oh, I know, with <audio>, eh?

We could use it...

<audio src="cool_song.ogg" controls preload></audio>

This would...

- initiate network request for loading

- deal with decoding/streaming/buffering

- render audio controls

- display progress indicator, time...

It could also trigger some events!

- loadeddata

- error

- ended

- ... etc

And has methods you can use

- load

- play

- pause
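A minimal sketch of how those events and methods fit together. The helper name `wireAudio` and the `log` callback are made up for this example; only `addEventListener`, `load` and `play` come from the element itself.

```javascript
// Sketch: log a few media events, then ask the element to load.
// `audioEl` is any <audio> element; `log` is a hypothetical callback.
function wireAudio(audioEl, log) {
  ['loadeddata', 'error', 'ended'].forEach(function (type) {
    audioEl.addEventListener(type, function () {
      log(type);
    });
  });
  audioEl.load();
  return audioEl;
}
```

In a browser you would call `wireAudio(document.querySelector('audio'), console.log)` and then `play()` on the result, typically from a click handler.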

But it has shortcomings...

- hard to accurately schedule

- triggering multiple instances of same sound requires a hack

- it's tied to a DOM element

- output goes straight to the speakers - no fancy visualisations

- in some systems the OS will display a fullscreen player overlay

This won’t do for edgy artists

Is it all over?

Do we just give up and start writing native apps?

NO

Web Audio

to the rescue!

Web Audio...

- is modular

- interoperable with other JS/Web APIs

- not attached to the DOM

- runs in a separate thread

- 2014: supported in many browsers!

So how does it work?

We create an audio context

var audioContext = new AudioContext();

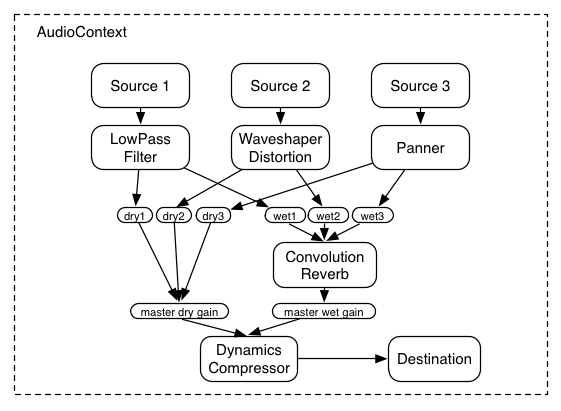

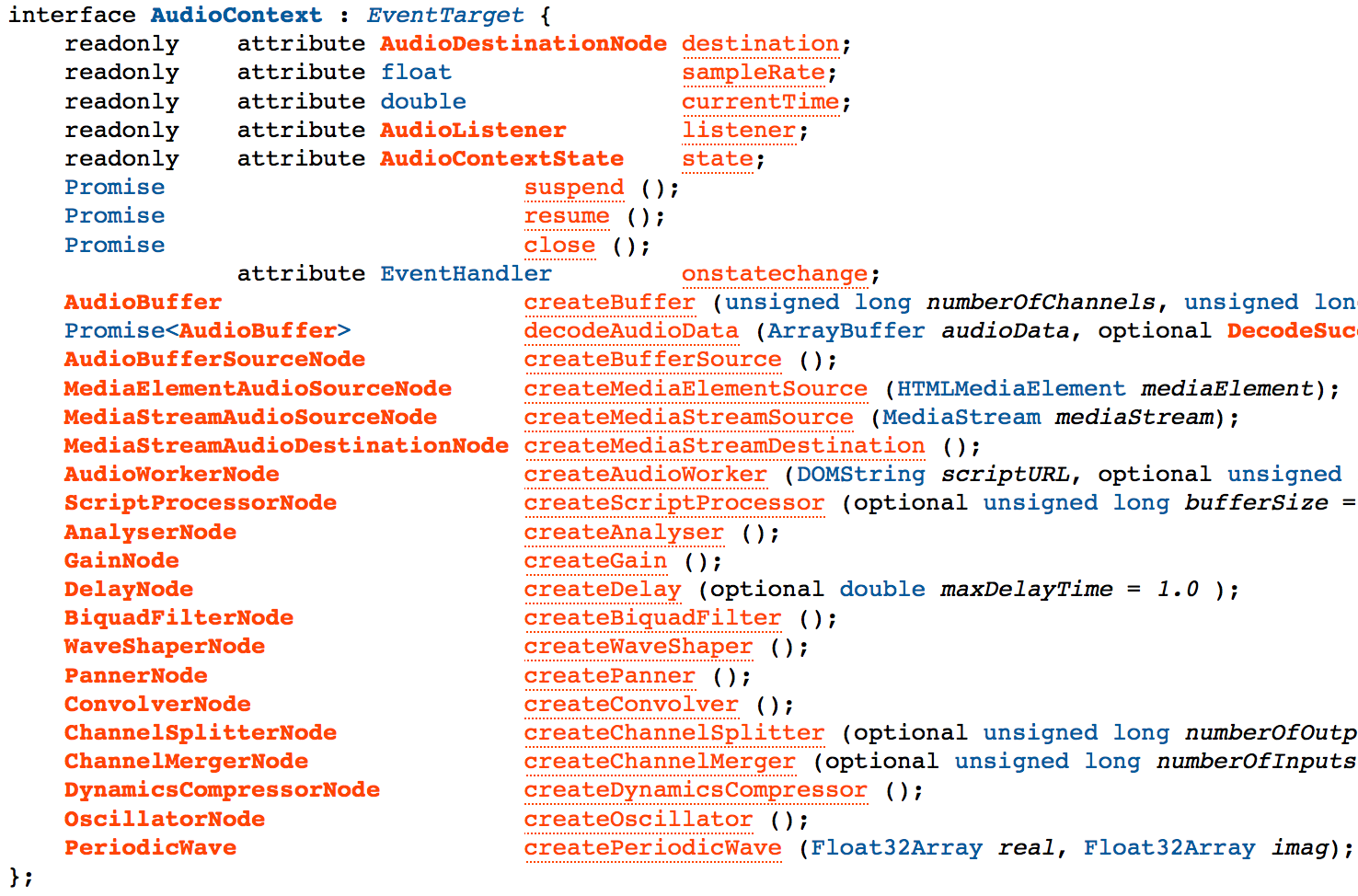

AudioContext

“Where everything happens”

AudioContext

- methods to create audio nodes

- some nodes generate audio

- others alter it

- others examine it

- they all form the audio graph

The audio graph? ô_Ô

Let’s make some noise

var audioContext = new AudioContext();

var oscillator = audioContext.createOscillator();

oscillator.connect(audioContext.destination);

oscillator.start(audioContext.currentTime);

Starting/stopping

// start it now

oscillator.start(audioContext.currentTime);

// 3 seconds from now

oscillator.start(audioContext.currentTime + 3)

// stop it now

oscillator.stop(audioContext.currentTime);

// start it again

oscillator.start(audioContext.currentTime); // !?!

Why can’t oscillators be restarted?

Welcome to your first Web Audio...

GOTCHA!

For performance reasons

One-use only nodes

- use and forget

- automatically disposed of by the GC

- as long as you don't keep references

- watch out for those memory leaks!

- the Web Audio Editor is super helpful

Write your own wrappers

Oscillator.js (1/3)

function Oscillator(context) {

var node = null;

var nodeNeedsNulling = false;

this.start = function(when) {

ensureNodeIsLive();

node.start(when);

};

Oscillator.js (2/3)

// continues

this.stop = function(when) {

if(node === null) {

return;

}

nodeNeedsNulling = true;

node.stop(when);

};

Oscillator.js (3/3)

// continues

function ensureNodeIsLive() {

if(nodeNeedsNulling || node === null) {

node = context.createOscillator();

}

nodeNeedsNulling = false;

}

}

Using it

var ctx = new AudioContext();

var osc = new Oscillator(ctx);

function restart() {

osc.stop(0);

osc.start(0);

}

osc.start(0);

setTimeout(restart, 1000);

Self regenerating oscillator
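The wrapper on the slides never connects its node to anything, so here is a slightly fuller sketch. It assumes a GainNode can serve as a stable output that outlives each throwaway oscillator; the name `RestartableOscillator` is invented for this example.

```javascript
// Sketch of a restartable oscillator that can also be connected.
// Assumption: a GainNode is the permanent output, so callers connect once.
function RestartableOscillator(context) {
  var output = context.createGain(); // survives restarts
  var node = null;                   // current one-use OscillatorNode

  function stopNode(when) {
    if (node === null) {
      return;
    }
    node.stop(when);
    node = null; // drop the reference so the GC can collect it
  }

  this.connect = function (destination) {
    output.connect(destination);
  };

  this.start = function (when) {
    stopNode(when);                    // retire the previous node, if any
    node = context.createOscillator(); // fresh node for every start
    node.connect(output);
    node.start(when);
  };

  this.stop = stopNode;
}
```

Usage stays the same as before, plus one `osc.connect(ctx.destination)` right after construction.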

But before I continue...

It would be nice to see what is going on!

Let’s use an

AnalyserNode

AnalyserNode, 1

var analyser = context.createAnalyser();

analyser.fftSize = 2048;

var analyserData = new Float32Array(

analyser.frequencyBinCount

);

oscillator.connect(analyser);

AnalyserNode, 2

requestAnimationFrame(animate);

function animate() {

analyser.getFloatTimeDomainData(analyserData);

drawSample(canvas, analyserData);

requestAnimationFrame(animate); // keep the loop going

}
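`drawSample` isn't defined on the slides. One possible version, assuming a plain 2D canvas and samples in the [-1, 1] range, could look like this:

```javascript
// Hypothetical drawSample: plots the samples left to right on the canvas.
function drawSample(canvas, data) {
  var ctx = canvas.getContext('2d');
  var width = canvas.width;
  var height = canvas.height;
  var step = width / data.length;

  ctx.clearRect(0, 0, width, height);
  ctx.beginPath();
  for (var i = 0; i < data.length; i++) {
    var x = i * step;
    var y = (1 - data[i]) * 0.5 * height; // +1 maps to the top, -1 to the bottom
    if (i === 0) {
      ctx.moveTo(x, y);
    } else {
      ctx.lineTo(x, y);
    }
  }
  ctx.stroke();
}
```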

Analyser

Now,

can we play something other than that beep?

Yes!

Nodes have properties we can change, e.g.

oscillator.type

- sine

- square

- sawtooth

- triangle

- custom

oscillator.type = 'square';
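The 'custom' type is the odd one out: rather than assigning the string, you hand the oscillator a PeriodicWave. A rough sketch, where `setCustomWave` and its `harmonics` argument are names invented for this example:

```javascript
// Sketch: build a custom waveform from a list of harmonic amplitudes.
// harmonics[0] is the fundamental, harmonics[1] the 2nd harmonic, etc.
function setCustomWave(context, oscillator, harmonics) {
  var real = new Float32Array(harmonics.length + 1); // cosine terms, left at 0
  var imag = new Float32Array(harmonics.length + 1); // sine terms
  for (var i = 0; i < harmonics.length; i++) {
    imag[i + 1] = harmonics[i]; // index 0 is the DC offset, left at 0
  }
  var wave = context.createPeriodicWave(real, imag);
  oscillator.setPeriodicWave(wave);
  return wave;
}
```

For instance `setCustomWave(audioContext, oscillator, [1, 0.5, 0.25])` gives a fundamental with two progressively quieter overtones.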

Wave types

oscillator.frequency

Naive attempt:

oscillator.frequency = 880;

It doesn’t work! 😬

oscillator.frequency

is an AudioParam

It is special

// Access it with

oscillator.frequency.value = 880;

So what is the point of AudioParam?

Superpowers.

Superpower #1

Scheduling changes with accurate timing

What NOT to do

- setInterval

- setTimeout

Stepped sounds

AudioParam approach

Web Audio keeps a list of timed events per parameter

- setValueAtTime

- linearRampToValueAtTime

- exponentialRampToValueAtTime

- setTargetAtTime

- setValueCurveAtTime

Go from 440 to 880 Hz in 3 seconds

osc.frequency.setValueAtTime(

440,

audioContext.currentTime

);

osc.frequency.linearRampToValueAtTime(

880,

audioContext.currentTime + 3

);

Minigotchas

- avoid using param.value --it doesn’t add an event to the list

- avoid using 0 as when--times have to be ascending
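Both minigotchas can be folded into a small helper. This is only a sketch, and `scheduleRamp` is a made-up name: it anchors the start value with an event instead of `param.value`, and it expects an explicit start time rather than 0.

```javascript
// Sketch: schedule a linear ramp with an explicit, anchored start.
function scheduleRamp(param, from, to, startTime, seconds) {
  param.setValueAtTime(from, startTime); // an event, not param.value
  param.linearRampToValueAtTime(to, startTime + seconds);
  return startTime + seconds; // end time, handy for chaining
}
```

Because it returns the end time, ramps can be chained in ascending order: `var t = scheduleRamp(osc.frequency, 440, 880, audioContext.currentTime, 3);` followed by `scheduleRamp(osc.frequency, 880, 440, t, 3);`.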

Let’s build an ADSR envelope

ADSwhat...?

- Attack Decay Sustain Release

- Used a lot in subtractive synthesis

- Relatively easy to configure and compute

We need a new node for controlling the volume

GainNode

var ctx = new AudioContext();

var osc = ctx.createOscillator();

var gain = ctx.createGain(); // *** NEW

osc.connect(gain); // *** NEW

gain.connect(ctx.destination); // *** NEW

ADSR part 1

// Attack/Decay/Sustain phase

gain.gain.setValueAtTime(

0,

audioContext.currentTime

);

gain.gain.linearRampToValueAtTime(

1,

audioContext.currentTime + attackLength

);

gain.gain.linearRampToValueAtTime(

sustainValue,

audioContext.currentTime + attackLength + decayLength

);

ADSR part 2

// Release phase

gain.gain.linearRampToValueAtTime(

0,

audioContext.currentTime + releaseLength

);

Envelopes

Cancelling events!

osc.frequency.cancelScheduledValues(

audioContext.currentTime

);
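Putting the envelope phases and cancelling together, one possible helper looks like this. The `createEnvelope` name and its option names are invented for this sketch, and it assumes that reading `param.value` during automation returns the value currently in effect.

```javascript
// Sketch of an ADSR helper for any AudioParam (e.g. gain.gain).
// Option names (attack, decay, sustain, release) are made up for this example.
function createEnvelope(param, options) {
  return {
    noteOn: function (now) {
      param.cancelScheduledValues(now); // forget any pending release
      param.setValueAtTime(0, now);
      param.linearRampToValueAtTime(1, now + options.attack);
      param.linearRampToValueAtTime(
        options.sustain,
        now + options.attack + options.decay
      );
    },
    noteOff: function (now) {
      param.cancelScheduledValues(now);       // drop unfinished attack/decay
      param.setValueAtTime(param.value, now); // anchor where the release starts
      param.linearRampToValueAtTime(0, now + options.release);
    }
  };
}
```

With the GainNode from earlier: `var env = createEnvelope(gain.gain, { attack: 0.1, decay: 0.2, sustain: 0.6, release: 0.5 });`, then `env.noteOn(ctx.currentTime)` on key down and `env.noteOff(ctx.currentTime)` on key up.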

Superpower #2

Modulation

Connect the output of one node to another node’s AudioParam

LFOs

LFOs

We can’t hear those frequencies...

but can use them to alter other values we can notice!

SPOOKY SOUNDS

Watch out!

var context = new AudioContext();

var osc = context.createOscillator();

var lfOsc = context.createOscillator();

var gain = context.createGain();

lfOsc.connect(gain);

// The output from gain is in the [-1, 1] range

gain.gain.value = 100;

// now the output from gain is in the [-100, 100] range!

gain.connect(osc.frequency); // NOT the destination

KEEP watching out

osc.frequency.value = 440;

// oscillation frequency is 1Hz = once per second

lfOsc.frequency.value = 1;

osc.start();

lfOsc.start();

spooky LFOs
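The same trick works on any AudioParam, not just frequency. As a sketch, here is a tremolo: an LFO wobbling a GainNode's volume. `createTremolo` and its parameters are names invented for this example.

```javascript
// Sketch: tremolo, i.e. an LFO modulating volume instead of pitch.
// With depth 0.25 the gain swings between 0.5 and 1.
function createTremolo(context, rateHz, depth) {
  var volume = context.createGain();   // the gain being modulated
  var lfo = context.createOscillator();
  var lfoDepth = context.createGain(); // scales the LFO's [-1, 1] output

  volume.gain.value = 1 - depth;       // centre of the wobble
  lfo.frequency.value = rateHz;
  lfoDepth.gain.value = depth;

  lfo.connect(lfoDepth);
  lfoDepth.connect(volume.gain);       // an AudioParam, NOT the destination
  lfo.start();

  return volume; // connect sources into it, and it into the destination
}
```

Something like `osc.connect(tremolo); tremolo.connect(context.destination);` with `var tremolo = createTremolo(context, 4, 0.25);` should give four wobbles per second.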

Playing existing samples

- AudioBufferSourceNode for short samples (< 1 min)

- MediaElementAudioSourceNode for longer sounds

AudioBufferSourceNode, 1

var context = new AudioContext();

var pewSource = context.createBufferSource();

var request = new XMLHttpRequest();

request.open('GET', samplePath, true);

request.responseType = 'arraybuffer'; // we want binary data 'as is'

request.onload = function() {

context.decodeAudioData(

request.response,

loadedCallback, errorCallback

);

};

request.send(); // nothing loads until the request is actually sent

AudioBufferSourceNode, 2

var abs = context.createBufferSource();

function loadedCallback(bufferSource) {

abs.buffer = bufferSource;

abs.start();

}

function errorCallback() {

alert('No PEW PEW for you');

}

AudioBufferSourceNode, 3

Just like oscillators!

abs.start(when);

abs.stop(when);

AudioBufferSourceNodes even die like oscillators!

Pssst:

You can create them again and reuse the buffer
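In practice that means decoding once and creating a new source node per playback. A minimal sketch, with `playBuffer` as a hypothetical helper name:

```javascript
// Sketch: one decoded AudioBuffer, many throwaway source nodes.
function playBuffer(context, buffer, when) {
  var source = context.createBufferSource(); // cheap, one-use node
  source.buffer = buffer;                    // shared, decoded only once
  source.connect(context.destination);
  source.start(when === undefined ? context.currentTime : when);
  return source; // keep it only if you need to stop() early
}
```

Calling `playBuffer(context, pewBuffer)` on every key press gives overlapping pews without decoding the sample again (`pewBuffer` being whatever `decodeAudioData` handed to your callback).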

pewpewmatic

MediaElementAudioSourceNode

Takes the output of <audio> or <video> and incorporates them into the graph.

var video = document.querySelector('video');

var audioSourceNode =

context.createMediaElementSource(

video

);

audioSourceNode.connect(context.destination);

Media element
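Once the element's audio is in the graph, it can go through any other node before reaching the speakers. A sketch that muffles a video's soundtrack with a low-pass filter; `muffle` and `cutoffHz` are names invented here, while `createMediaElementSource` and `createBiquadFilter` are the Web Audio factory methods.

```javascript
// Sketch: route a media element through a low-pass filter.
function muffle(context, mediaElement, cutoffHz) {
  var source = context.createMediaElementSource(mediaElement);
  var filter = context.createBiquadFilter();

  filter.type = 'lowpass';
  filter.frequency.value = cutoffHz; // frequencies above this are attenuated

  source.connect(filter);
  filter.connect(context.destination);
  return filter; // its frequency is an AudioParam, so it can be automated too
}
```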

More Web Audio nodes

- delay

- filter (low-pass, band-pass, high-pass)

- panning (3D sounds!)

- reverb (via convolver)

- splitter

- waveshaper

- compressor

Their parameters can also be modulated and automated!
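As an illustration of combining these nodes, here is a rough feedback echo built from a DelayNode and a few GainNodes. The `createEcho` name and the returned object shape are assumptions for this sketch.

```javascript
// Sketch: echo = delay line with a feedback loop.
// createDelay() defaults to a 1 second maximum, so keep delaySeconds <= 1.
function createEcho(context, delaySeconds, feedbackAmount) {
  var input = context.createGain();
  var delay = context.createDelay();
  var feedback = context.createGain();
  var output = context.createGain();

  delay.delayTime.value = delaySeconds;
  feedback.gain.value = feedbackAmount; // keep below 1 or it never fades out

  input.connect(output);   // dry signal
  input.connect(delay);    // wet signal
  delay.connect(feedback);
  feedback.connect(delay); // the loop: each repeat comes back quieter
  delay.connect(output);

  return { input: input, output: output, delay: delay, feedback: feedback };
}
```

Connect a source to `echo.input` and `echo.output` to the destination; `echo.delay.delayTime` and `echo.feedback.gain` are ordinary AudioParams, so the scheduling and modulation tricks apply to them as well.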

Wait, there is more!

Mix all the APIs!

- Using getUserMedia + MediaStreamAudioSourceNode

- Web Audio Workers - generate audio in realtime with JavaScript

- OfflineAudioContext - render as fast as possible! Beat detection, etc...

- ???

- BE EDGY!

And there is

STILL more!

I've been hacking on Web Audio stuff for the last 3 years

so I've done the same things over and over

in different ways

I've also spoken to many people about audio stuff

- Angelina Fabbro saw my <audio-tags> and said "hey this looks promising, let's do more of this!", so they created a GitHub org for "audio stuff"

But we didn't know what exactly to put in there...

- Jordan Santell discussed his component based audio components.

- Max Ogden and his little modules made me understand "the Node Way"

At some point the stars aligned:

- I finally understood AudioParams

- I found the way to simulate custom audio nodes

- and I was going to speak about music in the 21st century

Suddenly everything made sense

It was, at last, the moment for...

(dramatic pause)

OpenMusic

Modules and components

for Web Audio

github.com/openmusic

OpenMusic right now:

- web components: oscilloscope, slider

- audio components: oscillator, sample player, clipper, dcbias

- eventing: tracker player

- audio generation: noise functions (white, brown, pink)

All based on npm, dependencies sorted out on npm install
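To give a flavour of what a "one functionality" module might contain, here is a sketch of a white noise generator. This is not the actual OpenMusic code; `createWhiteNoiseBuffer` and the injectable `random` argument are assumptions made for this example.

```javascript
// Sketch: fill a mono AudioBuffer with white noise.
// `random` is optional and only there so the function can be tested.
function createWhiteNoiseBuffer(context, seconds, random) {
  random = random || Math.random;
  var length = Math.floor(seconds * context.sampleRate);
  var buffer = context.createBuffer(1, length, context.sampleRate);
  var samples = buffer.getChannelData(0);
  for (var i = 0; i < length; i++) {
    samples[i] = random() * 2 - 1; // map [0, 1) to [-1, 1)
  }
  return buffer;
}
```

The result can be assigned to an AudioBufferSourceNode's `buffer` and looped with `loop = true` for continuous hiss.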

What it looks like

var Oscillator = require('openmusic-oscillator');

var ac = new AudioContext();

var osc = Oscillator(ac);

osc.connect(ac.destination);

osc.start();

i.e. pretty much like other AudioNodes

Principles

- behave like standard AudioNodes

- one functionality, one module

- composable

Our wish:

- People use these bits and pieces

- Or they look at them and build their own and we can use theirs

- Bits and pieces become tools

- A web audio ecosystem forms...